
Issues and Techniques in Touch-Sensitive Tablet Input

William Buxton

Ralph Hill

Peter Rowley

Computer Systems Research Institute

University of Toronto

Toronto, Ontario

Canada M5S 1A4

Abstract

Touch-sensitive tablets and their use in human-computer interaction are discussed. It is shown that such devices have some important properties that differentiate them from other input devices (such as mice and joysticks). The analysis serves two purposes: (1) it sheds light on touch tablets, and (2) it demonstrates how other devices might be approached. Three specific distinctions between touch tablets and one-button mice are drawn. These concern the signaling of events, multiple point sensing and the use of templates. These distinctions are reinforced, and possible uses of touch tablets are illustrated, in an example application. Potential enhancements to touch tablets and other input devices are discussed, as are some inherent problems. The paper concludes with recommendations for future work.

CR Categories and Subject Descriptors: I.3.1 [Computer Graphics]: Hardware Architecture: Input Devices. I.3.6 [Computer Graphics]: Methodology and Techniques: Device Independence, Ergonomics, Interaction Techniques.

General Terms: Design, Human Factors.

Additional Keywords and Phrases: touch sensitive input devices.

Now that the range of available devices is expanding, how does one select the best technology for a particular application? And once a technology is chosen, how can it be used most effectively? These questions are important, for as Buxton [1983] has argued, the ways in which the user physically interacts with an input device have a marked effect on the type of user interface that can be effectively supported.

In general terms, the objective of this paper is to help in the selection of input devices and to assist in their effective use. Our approach is to investigate one specific class of devices: touch-sensitive tablets. We will identify touch tablets, enumerate their important properties, and compare them to a more common input device, the mouse. We then go on to give examples of transactions where touch tablets can be used effectively. There are two intended benefits of this approach. First, the reader will acquire an understanding of touch tablet issues. Second, the reader will have a concrete example of how the technology can be investigated, and can use the approach as a model for investigating other classes of devices.

What we have described in the previous paragraph is a simple touch tablet. Only one point of contact is sensed, and then only in a binary, touch/no touch, mode. One way to extend the potential of a simple touch tablet is to sense the degree, or pressure, of contact. Another is to sense multiple points of contact. In this case, the location (and possibly pressure) of several points of contact would be reported. Most tablets currently on the market are of the "simple" variety. However, Lee, Buxton and Smith [1985], and Nakatani [private communication] have developed prototypes of multi-touch, multi-pressure sensing tablets.

We wish to stress that we will restrict our discussion of touch technologies to touch tablets, which can and should be used in ways that are different from touch screens. Readers interested in touch-screen technology are referred to Herot & Weinzapfel [1978], Nakatani & Rohrlich [1983] and Minsky [1984]. We acknowledge that a flat touch screen mounted horizontally is a touch tablet as defined above. This is not a contradiction, as a touch screen has exactly the properties of touch tablets we describe below, as long as there is no attempt to mount a display below (or behind) it or to make it the center of the user's visual focus.

Touch tablets have a number of properties that distinguish them from other devices:

In the next section we will make three important distinctions between touch tablets and mice. These are:

Finally, we discuss improvements that must be made to current touch tablet technology, many of which we have demonstrated in prototype form. Also, we suggest potential improvements to other devices, motivated by our experience with touch technology.

Signaling

Consider a rubber-band line drawing task with a one-button mouse. The user would first position the tracking symbol at the desired starting point of the line by moving the mouse with the button released. The button would then be depressed, to signal the start of the line, and the user would manipulate the line by moving the mouse until the desired length and orientation were achieved. The completion of the line could then be signaled by releasing the button.[2]

Figure 1 is a state diagram that represents this interface. Notice that the button press and release are used to signal the beginning and end of the rubber-band drawing task. Also note that in states 1 and 2 both motion and signaling (by pressing or releasing the button, as appropriate) are possible.

Figure 1. State diagram for rubber-banding with a one-button mouse.
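The Figure 1 state machine can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the class name `RubberBandMouse` and its methods are hypothetical, and mouse events are assumed to arrive as separate move, press and release calls.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[int, int]


@dataclass
class RubberBandMouse:
    """Figure 1: state 1 = tracking (button up), state 2 = rubber-banding (button down)."""
    state: int = 1
    cursor: Point = (0, 0)
    anchor: Optional[Point] = None
    lines: List[Tuple[Point, Point]] = field(default_factory=list)

    def move(self, p: Point) -> None:
        # Motion is possible in both states; in state 2 it stretches the
        # line from the anchor to the cursor.
        self.cursor = p

    def press(self) -> None:
        # Button down signals the start of the line (state 1 -> state 2).
        if self.state == 1:
            self.anchor = self.cursor
            self.state = 2

    def release(self) -> None:
        # Button up signals completion of the line (state 2 -> state 1).
        if self.state == 2 and self.anchor is not None:
            self.lines.append((self.anchor, self.cursor))
            self.anchor = None
            self.state = 1


m = RubberBandMouse()
m.move((10, 10))
m.press()
m.move((50, 20))
m.release()
print(m.lines)  # [((10, 10), (50, 20))]
```

Note that both signals (press and release) are issued while the pointer stays under control, which is exactly what a simple touch tablet cannot do.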

Now consider a simple touch tablet. It can be used to position the tracking symbol at the starting point of the line, but it cannot generate the signal needed to initiate rubber-banding. Figure 2 is a state diagram representation of the capabilities of a simple touch tablet. In state 0, there is no contact with the tablet.[3] In this state only one action is possible: the user may touch the tablet. This causes a change to state 1. In state 1, the user is pressing on the tablet, and as a consequence position reports are sent to the host. There is no way to signal a change to some other state, other than to release (assuming the exclusion of temporal or spatial cues, which tend to be clumsy and difficult to learn). This returns the system to state 0. This signal could not be used to initiate rubber-banding, as it could also mean that the user is pausing to think, or wishes to initiate some other activity.

Figure 2. Diagram showing the states of a simple touch tablet.

This inability to signal while pointing is a severe limitation of current touch tablets, that is, tablets that do not report pressure in addition to location. (It is also a property of trackballs, and of joysticks without "fire" buttons.) It renders them unsuitable for use in many common interaction techniques for which mice are well adapted (e.g., selecting and dragging objects into position, rubber-band line drawing, and pop-up menu selection); these techniques are especially characteristic of interfaces based on Direct Manipulation [Shneiderman 1983].

One solution to the problem is to use a separate function button on the keyboard. However, this usually means either two-handed input where one hand would suffice, or awkward coordination in controlling the button and the pointing device with a single hand. An alternative solution when using a touch tablet is to provide some level of pressure sensing. For example, if the tablet could report two levels of contact pressure (i.e., hard and soft), then the transition from soft to hard pressure, and vice versa, could be used for signaling. In effect, pressing hard is equivalent to pressing the button on the mouse. The state diagram for the rubber-band line drawing task with this form of touch tablet is shown in Figure 3.[4]

Figure 3. State diagram for rubber-banding with a pressure-sensing touch tablet.
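A host might turn two levels of pressure into the transitions of Figure 3 roughly as sketched below. The normalized 0.0 to 1.0 pressure scale and all three threshold values are assumptions chosen for illustration. The lower release threshold (`HARD_OFF`) is one way to realize the hysteresis suggested by Card in footnote 4, a suggestion the paper notes still warrants formal experimentation.

```python
from typing import Iterable, List, Optional, Tuple

Point = Tuple[int, int]

# Hypothetical thresholds on a normalized 0.0-1.0 pressure scale.
TOUCH = 0.05     # below this there is no contact (state 0)
HARD_ON = 0.60   # soft -> hard when pressure rises to this level
HARD_OFF = 0.45  # hard -> soft only when pressure falls below this (hysteresis)


def contact_state(pressure: float, previous: int) -> int:
    """Classify one sample as 0 (no contact), 1 (soft) or 2 (hard)."""
    if pressure < TOUCH:
        return 0
    threshold = HARD_OFF if previous == 2 else HARD_ON
    return 2 if pressure >= threshold else 1


def rubber_band(samples: Iterable[Tuple[int, int, float]]) -> List[Tuple[Point, Point]]:
    """Soft pressure tracks; pressing hard starts a line; easing off ends it."""
    state = 0
    anchor: Optional[Point] = None
    last: Optional[Point] = None
    lines: List[Tuple[Point, Point]] = []
    for x, y, pressure in samples:
        new = contact_state(pressure, state)
        if new != 0:
            last = (x, y)  # positions are only meaningful while in contact
        if state != 2 and new == 2:    # equivalent to pressing the mouse button
            anchor = last
        elif state == 2 and new != 2:  # equivalent to releasing it
            lines.append((anchor, last))
            anchor = None
        state = new
    return lines
```

With samples `(10, 10, 0.3)`, `(10, 10, 0.7)`, `(30, 20, 0.5)`, `(40, 25, 0.3)`, the line starts at (10, 10) and ends at (40, 25); the reading of 0.5 keeps the line stretching because it is above `HARD_OFF`, even though it would not have been enough to start it.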

As an aside, this pressure-sensing scheme would permit us to select options from a menu, or activate light buttons, by positioning the tracking symbol over the item and "pushing". This is consistent with the gesture used with a mouse, and with the model of "pushing" buttons. With current simple touch tablets, one does just the opposite: position over the item and then lift off, or "pull" the button.

From the perspective of the signals sent to the host computer, this touch tablet is capable of duplicating the behaviour of a one-button mouse. This is not to say that these devices are equivalent or interchangeable. They are not. They are physically and kinesthetically very different, and should be used in ways that make use of the unique properties of each. Furthermore, such a touch tablet can generate one pair of signals that the one-button mouse cannot: specifically, press and release (the transitions to and from state 0 in the above diagrams). These signals (which are also available with many conventional tablets) are very useful in implementing certain types of transactions, such as those based on character recognition.

An obvious extension of the pressure-sensing concept is continuous pressure sensing, that is, sensing in which a large number of different pressure levels can be reported. This extends the capability of the touch tablet beyond that of a traditional one-button mouse. An example of the use of this feature is presented below.

Multiple Position Sensing

With a traditional mouse or tablet, only one position can be reported per device. One can imagine using two mice or possibly two transducers on a tablet, but this increases costs, and two is the practical limit on the number of mice or tablets that can be operated by a single user (without using feet). However, while we have only two hands, we have ten fingers. As playing the piano illustrates, there are some contexts where we might want to use several, or even all of them, at once.

Touch tablets need not restrict us in this regard. Given a large enough surface of the appropriate technology, one could use all fingers of both hands simultaneously, thus providing ten separate units of input. Clearly, this is well beyond the demands of many applications and the capacity of many people; however, there are exceptions. Examples include chording on buttons or switches, operating a set of slide potentiometers, and simple key roll-over when touch typing. One example (using a set of slide potentiometers) is illustrated below.

Multiple Virtual Devices and Templates

The power of modern graphics displays has been enhanced by partitioning one physical display into a number of virtual displays. To support this, display window managers have been developed. We claim (see Brown, Buxton and Murtagh [1990]) that similar benefits can be gained by developing an input window manager that permits a single physical input device to be partitioned into a number of virtual input devices. Furthermore, we claim that multi-touch tablets are well suited to supporting this approach.

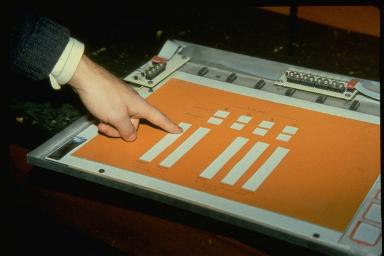

Figure 4a. Sample template

Figure 4b. Sample template in use.

Figure 4a shows a thick cardboard sheet that has holes cut in specific places. When it is placed over a touch tablet as shown in Figure 4b, the user is restricted to touching only certain parts of the tablet. More importantly, the user can feel the touchable parts and their shape. Each of the "touchable" regions represents a separate virtual device. The distinction between this template and traditional tablet-mounted menus (such as those seen in many CAD systems) is important.

Traditionally, the options have been:

The example paint program allows the creation of simple finger paintings. The layout of the main display for the program is shown in Figure 5. On the left is a large drawing area where the user can draw simple free-hand figures. On the right is a set of menu items. When the lowest item is selected, the user enters a colour mixing mode. In switching to this mode, the user is presented with a different display that is discussed below. The remaining menu items are "paint pots". They are used to select the colour that the user will be painting with.

In each of the following versions of the program, the input requirements are slightly different. In all cases an 8 cm x 8 cm touch tablet is used (Figure 6), but the pressure-sensing requirements vary. These are noted in each demonstration.

Figure 5. Main display for paint program.

Figure 6. Touch tablet used in demonstrations.

5.1. Painting Without Pressure Sensing

This version of the paint program illustrates the limitation of having no pressure sensing. Consider the paint program described above, where the only input device is a touch tablet without pressure sensing. Menu selections could be made by pressing down somewhere in the menu area, moving the tracking symbol to the desired menu item and then selecting by releasing. To paint, the user would simply press down in the drawing area and move (see Figure 7 for a representation of the signals used for painting with this program).

There are several problems with this program. The most obvious arises in trying to do detailed drawings: the user does not know where the paint will appear until it appears, which is likely to be too late. Some form of feedback that shows the user where the brush is, without painting, is needed. Unfortunately, this cannot be done with this input device, as it is not possible to signal the change from tracking to painting and vice versa.

Figure 7. State diagram for the drawing portion of the simple paint program.

The simplest solution to this problem is to use a button (e.g., a function key on the keyboard) to signal state changes. The problem with this solution is the need to use two hands on two different devices to do one task. This is awkward and requires practice to develop the coordination needed to make small rapid strokes in the painting. It is also inefficient in its use of two hands where one could (and normally should) do.

Alternatively, approaches using multiple taps or timing cues for signalling could be tried; however, we have found that these invariably lead to other problems. It is better to find a direct solution using the properties of the device itself.

5.2. Painting with Two Levels of Pressure

This version of the program uses a tablet that reports two levels of contact pressure to provide a satisfactory solution to the signaling problem. A low pressure level (a light touch by the user) is used for general tracking. A heavier touch is used to make menu selections, or to enable painting (see Figure 8 for the tablet states used to control painting with this program). The two levels of contact pressure allow us to make a simple but practical one-finger paint program.

Figure 8. State diagram for the painting portion of the simple paint program using a pressure-sensing touch tablet.

This version is very much like using the one-button mouse on the Apple Macintosh with MacPaint [Williams, 1984]. Thus, while a simple touch tablet is not very useful here, one that reports two levels of pressure is similar in power (but not in feel or applicability) to a one-button mouse.[5]

5.3. Painting with Continuous Pressure Sensing

In the previous demonstrations, we have only implemented interaction techniques that are common using existing technology. We now introduce a technique that provides functionality beyond that obtainable using most conventional input technologies.

In this technique, we use a tablet capable of sensing a continuous range of touch pressure. With this additional signal, the user can control both the width of the paint trail and its path, using only one finger: the new signal, pressure, is used to control width. This is a technique that cannot be used with any mouse that we are aware of, and to our knowledge is available on only one conventional tablet (the GTCO Digipad with pressure pen [GTCO 1982]).

We have found that, using current pressure-sensing tablets, the user can accurately supply two to three bits of pressure information after about 15 minutes of practice. This is sufficient for simple doodling and many other applications, but improved pressure resolution is required for high-quality painting.
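The mapping from continuous pressure to paint-trail width could be sketched as follows. The pressure scale, the width range and the default of 3 bits are hypothetical; quantizing to `2**bits` levels mirrors the observation that users can reliably supply only two to three bits of pressure information, so finer distinctions would mostly reflect noise.

```python
def brush_width(pressure: float, min_width: float = 1.0,
                max_width: float = 12.0, bits: int = 3) -> float:
    """Map normalized pressure (0.0-1.0) to a paint-trail width.

    Pressure is quantized to 2**bits bands (bits must be at least 1), so
    the user only has to hit one of a few distinguishable pressure levels.
    """
    p = min(max(pressure, 0.0), 1.0)          # clamp out-of-range readings
    levels = 2 ** bits
    level = min(int(p * levels), levels - 1)  # band index, 0 .. levels - 1
    return min_width + (max_width - min_width) * level / (levels - 1)
```

Raising `bits` as pressure resolution improves would give the finer control the paper says high-quality painting requires, without changing the calling code.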

5.4. "Windows" on the Tablet: Colour Selection

We now demonstrate how the surface of the touch tablet can be dynamically partitioned into "windows" onto virtual input devices. We use the same basic techniques as discussed under templates (above), but show how to use them without templates. We do this in the context of a colour selection module for our paint program. This module introduces a new display, shown in Figure 9.

Figure 9. Colour mixing display.

In this display, the large left side consists of a colour patch surrounded by a neutral grey border. This is the patch of colour the user is working on. The right side of the display contains three bar graphs with two light buttons underneath. The primary function of the bar graphs is to provide feedback, representing relative proportions of red, green and blue in the colour patch. Along with the light buttons below, they also serve to remind the user of the current layout of the touch tablet.

In this module, the touch tablet is used as a "virtual operating console". Its layout is shown (to scale) in Figure 10. There are 3 valuators (corresponding to the bar graphs on the screen) used to control colour, and two buttons: one, on the right, to bring up a pop-up menu used to select the colour to be modified, and another, on the left, to exit.

Figure 10. Layout of virtual devices on 8 cm x 8 cm touch tablet.

The single most important point to be made in this example is that a single physical device is being used to implement 5 virtual devices (3 valuators and 2 buttons). This is analogous to the use of a display window system, in its goals, and its implementation.
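The analogy with a display window system can be made concrete with a small hit-testing table that routes each touch to a virtual device. The coordinates below are hypothetical stand-ins for the Figure 10 layout (three valuators above, exit button on the left and menu button on the right), and `Region` and `route` are illustrative names, not part of any existing input window manager.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Region:
    """One virtual device: a named rectangle on the tablet surface (cm)."""
    name: str
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x < self.x1 and self.y0 <= y < self.y1


# Hypothetical layout on an 8 cm x 8 cm surface, origin at the lower left.
COLOUR_CONSOLE = [
    Region("red",   0.0,    2.0, 8 / 3,  8.0),
    Region("green", 8 / 3,  2.0, 16 / 3, 8.0),
    Region("blue",  16 / 3, 2.0, 8.0,    8.0),
    Region("exit",  0.0,    0.0, 4.0,    2.0),
    Region("menu",  4.0,    0.0, 8.0,    2.0),
]


def route(x: float, y: float, layout: List[Region]) -> Optional[str]:
    """Return the virtual device under (x, y), or None if none is there."""
    for region in layout:
        if region.contains(x, y):
            return region.name
    return None
```

Because the partition is just data, switching layouts when the user leaves the colour mixing module amounts to passing a different list to `route`; this is what allows the "windows" on the tablet to change instantly when no physical template is involved.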

The second main point is that there is nothing on the tablet to delimit the regions. This differs from the use of physical templates as previously discussed, and shows how, in the absence of the need for a physical template, we can instantly change the "windows" on the tablet, without sacrificing the ability to touch type.

We have found that when the tablet surface is small, and the partitioning of the surface is not too complex, users very quickly (typically in one or two minutes) learn the positions of the virtual devices relative to the edges of the tablet. More importantly, they can use the virtual devices practically error-free, without diverting attention from the display. (We have repeatedly observed this behaviour in the use of an application that uses a 10 cm square tablet divided into 3 sliders with a single button across the top.)

Because no template is needed, there is no need for the user to pause to change a template when entering the colour mixing module. Also, at no point is the user's attention diverted from the display. These advantages cannot be achieved with any other device we know of, without consuming display real estate.

The colour of the colour patch is manipulated by dragging the red, green and blue values up and down with the valuators on the touch tablet. The valuators are implemented in relative mode (i.e., they are sensitive to changes in position, not absolute position), and are manipulated like one-dimensional mice. For example, to make the patch more red, the user presses near the left side of the tablet, about half way to the top, and slides the finger up (see Figure 11). For larger changes, the device can be repeatedly stroked (much like stroking a mouse). Feedback is provided by changing the level in the bar graph on the screen and the colour of the patch.

Figure 11. Increasing red content by pressing on the red valuator and sliding up.
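A relative-mode valuator of the kind described above can be modelled as a value that changes with finger displacement and ignores where each stroke begins. The class name, the gain and the clamping to the range 0.0 to 1.0 are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RelativeValuator:
    """A slider that responds to finger motion rather than absolute position."""
    value: float = 0.5
    gain: float = 0.125            # hypothetical: change in value per cm of travel
    last_y: Optional[float] = None

    def touch(self, y: float) -> None:
        # Where a stroke begins does not matter; only later motion counts.
        self.last_y = y

    def drag(self, y: float) -> float:
        if self.last_y is not None:
            self.value += (y - self.last_y) * self.gain
            self.value = min(max(self.value, 0.0), 1.0)
            self.last_y = y
        return self.value

    def release(self) -> None:
        # Lifting off allows the strip to be stroked again, like lifting a mouse.
        self.last_y = None


red = RelativeValuator()
red.touch(4.0)
red.drag(6.0)     # 2 cm up: 0.5 -> 0.75
red.release()
red.touch(3.0)    # re-stroke starting lower down
red.drag(5.0)     # another 2 cm up: 0.75 -> 1.0
```

Relative mode is what makes the repeated stroking possible: since the starting point of a stroke is ignored, the user never has to find the current value's position on the strip.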

Using a mouse, the above interaction could be approximated by placing the tracking symbol over the bars of colour and dragging them up or down. However, if the bars are narrow, this takes visual acuity and concentration that distract attention from the primary task: monitoring the colour of the patch. Furthermore, note that the touch tablet implementation does not need the bars to be displayed at all; they are only a convenience to the user. There are interfaces where, in the interests of maximizing available display area, there will be no items on the display analogous to these bars. That is, there would be nothing on the display to support an interaction technique that allows values to be manipulated by a mouse.

Finally, we can take the example one step further by introducing the use of a touch tablet that can sense multiple points of contact (e.g., [Lee, et al. 1985]). With this technology, all three colour values could be changed at the same time (for example, fading to black by drawing all three sliders down together with three fingers of one hand). This simultaneous adjustment of colours could not be supported by a mouse, nor by any single commercially available input device we know of. Controlling several valuators with one hand is common in many operating consoles, for example studio lighting controls, audio mixers, and throttles for multi-engine vehicles (e.g., aircraft and boats). Hence, this example demonstrates a cost-effective method for providing functionality that is currently unavailable (or available only at great cost, in the form of a custom-fabricated console), but has wide applicability.
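With a multi-touch tablet, each finger can be bound to the slider it first lands on, and every bound finger is updated from the same frame of contacts, so three sliders can move at once. This sketch assumes a hypothetical report format in which the tablet tags each contact with a stable identifier from frame to frame; the class name, the three equal-width strips and the gain are likewise assumptions.

```python
from typing import Dict, List, Tuple

Contact = Tuple[int, float, float]  # (contact id, x cm, y cm): hypothetical format


class MultiTouchConsole:
    """Three relative sliders that can be driven simultaneously."""

    NAMES = ("red", "green", "blue")

    def __init__(self, width: float = 8.0, gain: float = 0.125):
        self.width = width
        self.gain = gain
        self.values: Dict[str, float] = {n: 0.5 for n in self.NAMES}
        self.bound: Dict[int, Tuple[str, float]] = {}  # id -> (slider, last y)

    def slider_at(self, x: float) -> str:
        i = min(int(x / (self.width / 3)), 2)
        return self.NAMES[i]

    def frame(self, contacts: List[Contact]) -> Dict[str, float]:
        seen = set()
        for cid, x, y in contacts:
            seen.add(cid)
            if cid not in self.bound:
                # A new finger is bound to the slider it first lands on.
                self.bound[cid] = (self.slider_at(x), y)
                continue
            name, last_y = self.bound[cid]
            v = self.values[name] + (y - last_y) * self.gain
            self.values[name] = min(max(v, 0.0), 1.0)
            self.bound[cid] = (name, y)
        # Fingers that are no longer reported have been lifted.
        for cid in list(self.bound):
            if cid not in seen:
                del self.bound[cid]
        return dict(self.values)
```

Fading to black then corresponds to one frame with three fingers resting on the three strips, followed by a frame in which all three have moved down together.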

5.5. Summary of Examples

Through these simple examples, we have demonstrated several things:

Perhaps the most difficult problem is providing good feedback to the user when using touch tablets. For example, if a set of push-on/push-off buttons is being simulated, the traditional forms of feedback (illuminated buttons or different button heights) cannot be used. Also, buttons and other controls implemented on touch tablets lack the kinesthetic feel associated with real switches and knobs. As a result, users must be more attentive to visual and audio feedback, and interface designers must be freer in providing this feedback. (As an example of how this might be encouraged, the input "window manager" could automatically provide audible clicks as feedback for button presses.)

This problem can be eliminated by modifying the firmware of the touch tablet controller so that it keeps a short FIFO queue of the samples that have most recently been sent to the host. When the user releases pressure, the oldest sample in the queue is retransmitted, and the queue is emptied. The appropriate length of the queue depends on the properties of the touch tablet (e.g., sensitivity, sampling rate). We have found that determining a suitable value requires only a few minutes of experimentation.
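The controller-side fix can be simulated with a bounded queue. This sketch presumes, as the mechanism implies, that the samples reported just before release are the unreliable ones; the class name `ReleaseDejitter` and the default depth of 4 are hypothetical, since the paper leaves the queue length to experimentation.

```python
from collections import deque
from typing import Deque, Optional, Tuple

Sample = Tuple[int, int]


class ReleaseDejitter:
    """On release, resend the oldest of the most recently transmitted samples."""

    def __init__(self, depth: int = 4):
        # A deque with maxlen silently drops its oldest entry when full,
        # so it always holds the last `depth` samples sent to the host.
        self.queue: Deque[Sample] = deque(maxlen=depth)

    def sample(self, s: Sample) -> Sample:
        self.queue.append(s)  # remember what was sent to the host
        return s              # forwarded unchanged while in contact

    def release(self) -> Optional[Sample]:
        oldest = self.queue[0] if self.queue else None
        self.queue.clear()
        return oldest         # position the host should treat as final


dj = ReleaseDejitter(depth=3)
for s in [(10, 10), (11, 10), (12, 10), (15, 13), (19, 18)]:
    dj.sample(s)          # suppose the last two drift as the finger lifts
final = dj.release()      # (12, 10), from before the drift
```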

A related problem with most current tablet controllers (not just touch tablets) is that they do not inform the host computer when the user has ceased pressing on the tablet (or moved the puck out of range). This information is essential to the development of certain types of interfaces. (As already mentioned, this signal is not available from mice). Currently, one is reduced to deducing this event by timing the interval between samples sent by the tablet. Since the tablet controller can easily determine when pressure is removed (and must if it is to apply a de-jittering algorithm as above), it should share this information with the host.
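For comparison, the host-side workaround of timing the interval between samples might look like the following. It assumes a tablet that sends samples only while it is being pressed, and the 50 ms timeout is a hypothetical value. The sketch also exposes the cost of the workaround: a release cannot be recognized until `timeout` has elapsed, and a timeout shorter than the sampling interval would report spurious releases.

```python
from typing import List, Sequence


def infer_releases(timestamps: Sequence[float], now: float,
                   timeout: float = 0.05) -> List[float]:
    """Guess when the finger was lifted, given sample times in seconds.

    Any gap longer than `timeout` is taken to mean a release at the last
    sample before the gap. The final sample only counts as a release once
    `timeout` has passed without another sample arriving.
    """
    releases = [prev for prev, cur in zip(timestamps, timestamps[1:])
                if cur - prev > timeout]
    if timestamps and now - timestamps[-1] > timeout:
        releases.append(timestamps[-1])
    return releases
```

A controller that reports release directly removes both the added latency and the guesswork.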

Clearly, pressure sensing is an area open to development. Two pressure-sensing tablets have been developed at the University of Toronto [Sasaki, et al. 1981; Lee, et al. 1985]. One has been used to develop several experimental interfaces and was found to be a very powerful tool. Pressure-sensing tablets have recently become available from Elographics and Big Briar. Pressure sensing is not only for touch tablets. Mice, tablet pucks and styli could all benefit from augmenting their switches with strain gauges or other pressure-sensing instruments. GTCO, for example, manufactures a stylus with a pressure-sensing tip [GTCO 1982], and this, like our pressure-sensing touch tablets, has proven very useful.

This being the case, we have enumerated three major distinctions between touch tablets and one-button mice (although similar distinctions exist for multi-button mice and conventional tablets). These assist in identifying environments and applications where touch tablets would be most appropriate. These distinctions concern:

We hope that this paper motivates interface designers to consider the use of touch tablets and shows some ways to use them effectively. Also, we hope it encourages designers and manufacturers of input devices to develop and market input devices with the enhancements that we have discussed.

The challenge for the future is to develop touch tablets that sense continuous pressure at multiple points of contact and to incorporate them in practical interfaces. We believe that we have shown that this is worthwhile, and have shown some practical ways to use touch tablets. However, interface designers must still do a great deal of work to determine where a mouse is better than a touch tablet and vice versa.

Finally, we have illustrated, by example, an approach to the study of input devices, summarized by the credo: "Know the interactions a device is intended to participate in, and the strengths and weaknesses of the device." This approach stresses that there is no such thing as a "good input device," only good interaction task/device combinations.

Buxton, W. (1983). Lexical and pragmatic considerations of input structures. Computer Graphics, 17 (1), 31-37.

Buxton, W. (1986a). There's more to interaction than meets the eye: Some issues in manual input. In Norman, D. A. and Draper, S. W. (Eds.), (1986), User Centered System Design: New Perspectives on Human-Computer Interaction, Hillsdale, N.J.: Lawrence Erlbaum Associates, 319-337.

Buxton, W., Fiume, E., Hill, R., Lee, A. & Woo, C. (1983). Continuous Hand-Gesture Driven Input. Proceedings of Graphics Interface '83, Edmonton, May 1983, 191-195.

Card, S., Moran, T. & Newell, A. (1980). The Keystroke-Level Model for User Performance Time with Interactive Systems. Communications of the ACM, 23(7), 396-410.

Foley, J.D., Wallace, V.L. & Chan, P. (1984). The Human Factors of Computer Graphics Interaction Techniques. IEEE Computer Graphics and Applications, 4 (11), 13-48.

GTCO (1982). DIGI-PAD 5 User's Manual. GTCO Corporation, 1055 First Street, Rockville, MD, 20850.

Herot, C. & Weinzapfel, G. (1978). One-Point Touch Input of Vector Information from Computer Displays. Computer Graphics, 12(3), 210-216.

Lee, S.K., Buxton, W. & Smith, K.C. (1985). A Multi-Touch Three Dimensional Touch-Sensitive Tablet. Proceedings of CHI '85, 21-27.

Minsky, M. (1984). Manipulating Simulated Objects with Real-World Gestures Using a Force and Position Sensitive Screen. Computer Graphics, 18(3), 195-203.

Nakatani, L. & Rohrlich, J.A. (1983). Soft Machines: A Philosophy of User-Computer Interface Design. Proceedings of CHI '83, 19-23.

Sasaki, L., Fedorkow, G., Buxton, W., Retterath, C. & Smith, K.C. (1981). A Touch Sensitive Input Device. Proceedings of the 5th International Conference on Computer Music.

Shneiderman, B. (1983). Direct Manipulation: A Step Beyond Programming Languages. IEEE Computer, 16(8), 57-69.

[2] This assumes that the interface is designed so that the button is held down during drawing. Alternatively, the button can be released during drawing, and pressed again, to signal the completion of the line.

[3] We use state 0 to represent a state in which no location information is transmitted. There is no analogous state for mice, and hence no state 0 in the diagrams for mice. With conventional tablets, this corresponds to the "out of range" state.

At this point the alert reader will wonder about the difficulty of distinguishing between hard and soft pressure, and about friction (especially when pressing hard). Taking the last first, "hard" is a relative term. In practice friction need not be a problem (see "Inherent Problems", below).

[4] One would conjecture that in the absence of button clicks or other feedback, pressure would be difficult to regulate accurately. We have found two levels of pressure to be easily distinguished, but this is a ripe area for research. For example, Stu Card [private communication] has suggested that the threshold between soft and hard should be reduced (become "softer") while hard pressure is being maintained. This suggestion, and others, warrant formal experimentation.

[5] Also, there is the problem of friction, to be discussed below under "Inherent Problems".

[6] As a bad example, one commercial "touch" tablet requires so much pressure for reliable sensing that the finger cannot be smoothly dragged across the surface. Instead, a wooden or plastic stylus must be used, thus losing many of the advantages of touch sensing.